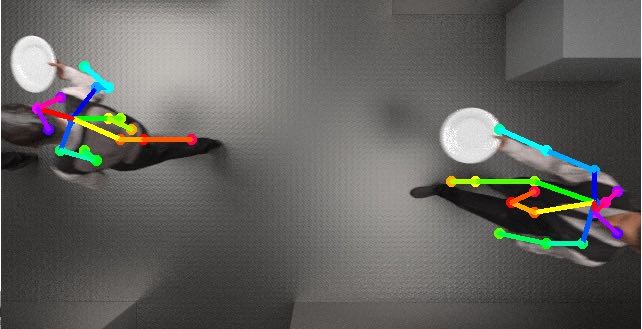

During an internship at the computer vision startup MoonVision GmbH, I was given the task of reliably tracking plates held by waiters. Because the scene was covered by a single ceiling-mounted camera, plates could easily be occluded for a moment by body parts. My idea was to solve this by jointly tracking the plates and partial skeletons (head, shoulders, arms). Initial experiments with an existing implementation of real-time multi-person pose estimation did not give satisfactory results. I attributed this to the viewing angle: our camera looked down from above, while the pretrained network had been trained mostly on eye-level images.

I therefore built a setup that generates training data synthetically. It renders a scene similar to the real one and automatically annotates the joint positions, so the rendered images can be used to train the pose estimation network. In experiments, the network generalized well to different textures within the synthetic 3D scene, but the project never reached the point where it generalized to real footage. I still believe this approach could be very useful for certain applications, and I hope to get the opportunity to invest time in it again in the future.
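The automatic annotation works because the renderer knows exactly where every joint is in 3D. As a hypothetical sketch (not the code used in the project), assuming each joint's world position and the virtual camera's pose `R`, `t` and pinhole intrinsics `fx`, `fy`, `cx`, `cy` are available, with no lens distortion, each joint can be projected to a 2D pixel keypoint. The names `world_to_camera` and `project_joints` are illustrative only.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Sequence[Sequence[float]]
Keypoint = Tuple[float, float, bool]  # (u, v, visible)


def world_to_camera(p: Vec3, R: Mat3, t: Vec3) -> Vec3:
    """Transform a world-space point into camera space: p_cam = R @ p + t."""
    return tuple(sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))


def project_joints(
    joints: Sequence[Vec3],
    R: Mat3,
    t: Vec3,
    fx: float,
    fy: float,
    cx: float,
    cy: float,
    width: int,
    height: int,
) -> List[Keypoint]:
    """Project 3D joint positions to 2D pixel keypoints (assumed pinhole model).

    Camera convention assumed here: x right, y down, z forward.
    'visible' is False if the joint is behind the camera or outside the image.
    Occlusion by other scene geometry is NOT checked.
    """
    keypoints: List[Keypoint] = []
    for p in joints:
        x, y, z = world_to_camera(p, R, t)
        if z <= 0:  # behind (or on) the image plane: no valid projection
            keypoints.append((float("nan"), float("nan"), False))
            continue
        u = fx * x / z + cx
        v = fy * y / z + cy
        inside = 0 <= u < width and 0 <= v < height
        keypoints.append((u, v, inside))
    return keypoints


if __name__ == "__main__":
    # Hypothetical top-down camera 3 m above the floor (world z points up).
    R = [[1, 0, 0], [0, -1, 0], [0, 0, -1]]  # camera looks along world -z
    t = (0.0, 0.0, 3.0)                      # t = -R @ camera_center
    joints = [(0.0, 0.0, 1.7), (0.2, 0.1, 1.5)]  # e.g. head, shoulder
    print(project_joints(joints, R, t, 500, 500, 320, 240, 640, 480))
```

A real pipeline would also need an occlusion test (for example, comparing each joint's depth against the rendered depth buffer), since a joint hidden behind an arm still projects to a valid pixel.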